Centralising AWS Networks using Resource Access Manager (RAM)

TL;DR: if you want to get straight to the meat and potatoes of this post 🥩🥔, you can find my example of centralising an AWS network with RAM using Terraform right here: github repo. The repo lets you configure a secure, centralised network account within AWS.

For further narration please read on...

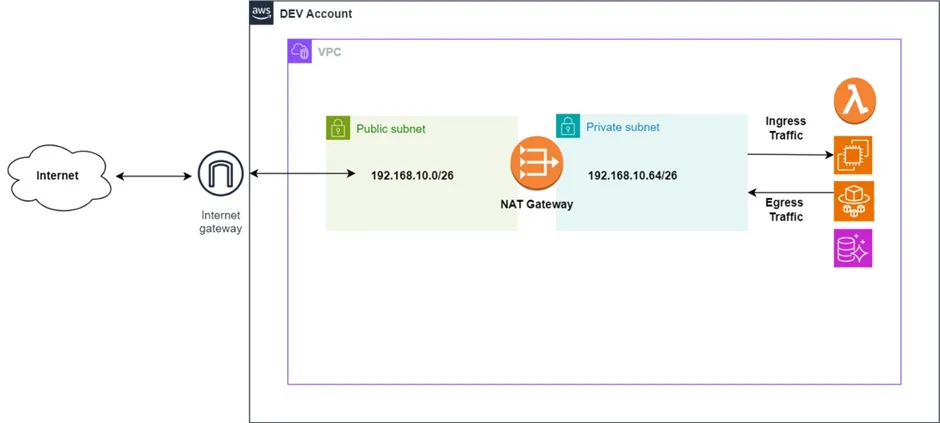

When first designing the network layout for a group of AWS accounts under an organisation, a common strategy is to provision internet access from each individual workload account. As a result, each AWS account within the organisation manages its own network intricacies and internet access.

In small and simple AWS networks, leaving each workload account responsible for its own network resources and ingress/egress access is manageable. A private AWS service sits in a private subnet whose route table directs outbound traffic to a NAT gateway, which in turn resides in a public subnet.

The NAT gateway translates the private source IP addresses so that the egress traffic leaves via its public Elastic IP address, through the internet gateway and out to the internet. Responses to those connections follow the same path in reverse; the NAT gateway does not, however, accept connections initiated from the internet.
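For reference, the single-account setup described above might look something like this in Terraform. This is a minimal sketch, not code from the linked repo: the resource names and CIDR ranges are hypothetical placeholders, availability zones are left for AWS to choose, and it assumes an AWS provider (v5 or later, for `domain = "vpc"` on the Elastic IP) already configured for the workload account.

```hcl
# Hypothetical single-account layout: one private subnet egressing through a
# NAT gateway that sits in a public subnet. CIDRs are placeholders.
resource "aws_vpc" "workload" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "public" {
  vpc_id     = aws_vpc.workload.id
  cidr_block = "10.0.0.0/24"
}

resource "aws_subnet" "private" {
  vpc_id     = aws_vpc.workload.id
  cidr_block = "10.0.1.0/24"
}

resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.workload.id
}

# The NAT gateway needs an Elastic IP and lives in the *public* subnet.
resource "aws_eip" "nat" {
  domain = "vpc"
}

resource "aws_nat_gateway" "nat" {
  allocation_id = aws_eip.nat.id
  subnet_id     = aws_subnet.public.id
  depends_on    = [aws_internet_gateway.igw]
}

# Public route table: default route straight out through the internet gateway.
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.workload.id
  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}

# Private route table: default route to the NAT gateway.
resource "aws_route_table" "private" {
  vpc_id = aws_vpc.workload.id
  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.nat.id
  }
}

resource "aws_route_table_association" "private" {
  subnet_id      = aws_subnet.private.id
  route_table_id = aws_route_table.private.id
}
```

Every workload account following this pattern carries its own copy of all of these resources, which is exactly what becomes painful at scale.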

Simple enough right?

For AWS accounts used for testing, labs or even very small organisations, this network architecture is the most logical and straightforward. But what happens when the size of our organisation and number of workload accounts increase?

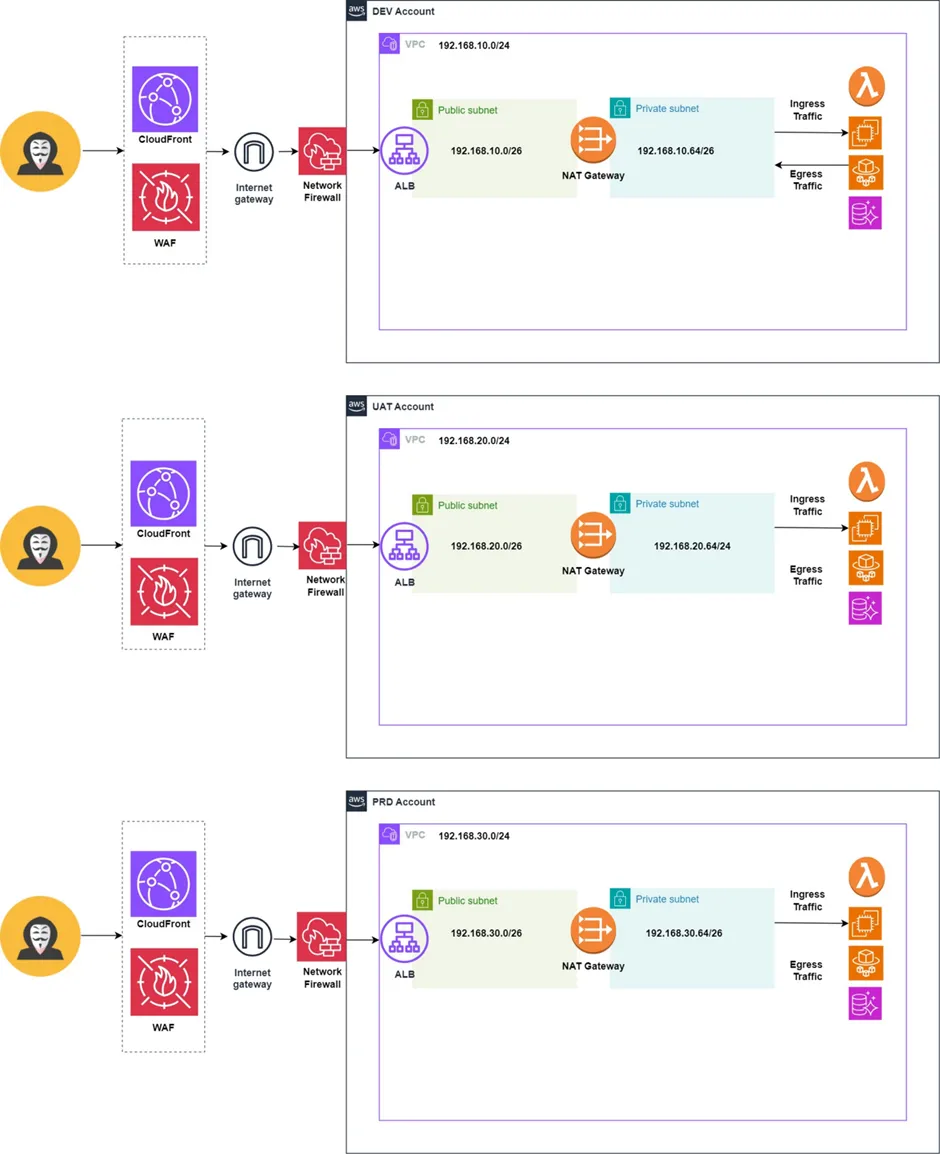

As you can see, once we begin to scale our workloads across several new AWS accounts, the complexity of our network architecture quickly becomes apparent.

Creating multiple account-specific network resources not only introduces additional costs but also increases the number of potential attack vectors within the organisation, which can hinder our ability to maintain a consistent set of network compliance standards.

While managing three separate workload accounts may still be manageable, the complexity grows dramatically as we add 5, 10, 20 accounts or more.

If we need to go beyond the standard VPC, internet gateway, NAT gateway and subnet resources and provision CloudFront distributions, WAFs, Network Firewalls, AWS Shield and so on, then multiplying these resources across every new account can quickly turn into a nightmare scenario.

Spreading network resources across every workload account in this way leads to increased administration overhead, escalating costs, and a risky expansion of our network attack surface. However, much of this can be avoided if we shift our strategy to a centralised network architecture instead.

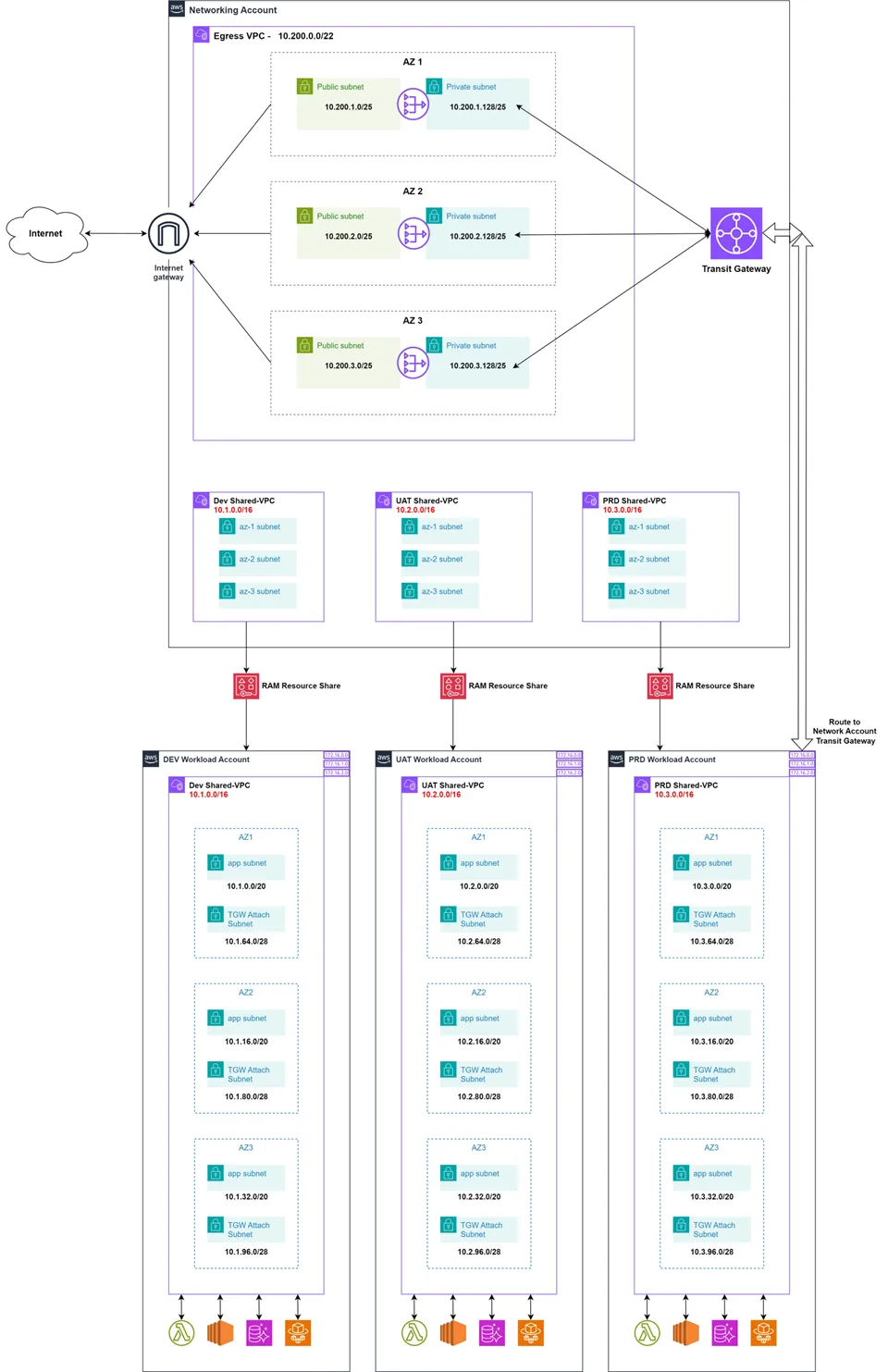

Now, I know, at first glance, this may seem more complex (and even a little bit bizarre compared to the conventional configuration) but what we see here is a fully centralised “Hub and Spoke” network topology.

The centralised network account allows us to inspect all of our traffic at one point, control ingress & egress traffic, reduce costs and reduce complexity by eliminating the need to maintain multiple network configurations in each individual workload account.

In order for us to achieve this architecture, there are 2 core services within this network topology that we need to understand:

- The use of Resource Access Manager (RAM) to share each VPC, via its subnets, from the networking account with the workload accounts.

- The use of Transit Gateway to route traffic between the networking account (the “hub”) and the workload accounts (the “spokes”).

If we look more closely at the diagram above, we can see where RAM comes in: we create 3 separate VPCs (DEV, UAT, PRD), each with its own set of subnets in each of the three availability zones it spans (AZ1, AZ2, AZ3).
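A single spoke VPC of that shape could be sketched in Terraform as follows. This is a hypothetical example rather than the repo's code: it assumes each AZ gets one "tgw" subnet and one "app" subnet (the two tiers named later in this post), uses placeholder CIDRs, and assumes the region has at least three availability zones. UAT and PRD would repeat the pattern with their own CIDR ranges.

```hcl
# Hypothetical DEV spoke VPC, created (and owned) by the network account.
data "aws_availability_zones" "available" {
  state = "available"
}

locals {
  vpc_cidr = "10.10.0.0/16" # placeholder range for DEV
  azs      = slice(data.aws_availability_zones.available.names, 0, 3)

  # One entry per (tier, AZ) pair, keyed e.g. "tgw-0" or "app-2".
  subnets = merge([
    for tier_index, tier in ["tgw", "app"] : {
      for az_index, az in local.azs :
      "${tier}-${az_index}" => {
        az = az
        # /24s carved from the VPC range: tgw tier gets .0-.2, app tier .10-.12
        cidr = cidrsubnet(local.vpc_cidr, 8, tier_index * 10 + az_index)
      }
    }
  ]...)
}

resource "aws_vpc" "dev" {
  cidr_block           = local.vpc_cidr
  enable_dns_hostnames = true
  tags                 = { Name = "dev" }
}

resource "aws_subnet" "dev" {
  for_each          = local.subnets
  vpc_id            = aws_vpc.dev.id
  cidr_block        = each.value.cidr
  availability_zone = each.value.az
  tags              = { Name = "dev-${each.key}" }
}
```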

RAM enables us to share the DEV VPC and its subnets from the networking account with the DEV workload account, the UAT VPC and subnets with the UAT workload account, and the same for the PRD account.
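In Terraform, sharing the DEV subnets from the networking account might look like the sketch below. It is a minimal illustration, not the repo's implementation: the variable names are hypothetical, and setting `allow_external_principals = false` assumes RAM sharing with AWS Organizations has been enabled from the management account (for example with the `aws_ram_sharing_with_organizations` resource), so an account inside the organisation can be added directly without an invitation.

```hcl
# Hypothetical inputs: the DEV workload account ID and the subnet ARNs to share.
variable "dev_account_id" {
  type = string
}

variable "dev_subnet_arns" {
  type = list(string)
}

resource "aws_ram_resource_share" "dev_vpc" {
  name                      = "dev-vpc-subnets"
  allow_external_principals = false # only principals inside our organisation
}

# Attach each subnet to the share. RAM shares subnets; the parent VPC becomes
# visible to the participant account through them.
resource "aws_ram_resource_association" "dev_subnets" {
  for_each           = toset(var.dev_subnet_arns)
  resource_arn       = each.value
  resource_share_arn = aws_ram_resource_share.dev_vpc.arn
}

# Grant the DEV workload account access to everything in the share.
resource "aws_ram_principal_association" "dev_account" {
  principal          = var.dev_account_id
  resource_share_arn = aws_ram_resource_share.dev_vpc.arn
}
```

UAT and PRD would each get their own share and principal association, which keeps every workload account scoped to its own environment's subnets.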

While services in each of the workload accounts may have permission to use the VPCs and subnets that have been shared from the networking account, they do not have the ownership needed to delete or modify them.

This ownership is reserved solely for the centralised network account in which these resources were created. From a security and compliance perspective this is a massive win.

The potential network attack surface that can be exploited by cyber bad guys is greatly reduced, as all network resources are locked down in the network account.

The Transit Gateway (TGW) acts as the central hub, a regional virtual router, for traffic between the ingress/egress network account and each of the workload accounts.

Each shared VPC's route tables send traffic bound for the network account to this Transit Gateway, which is attached to each VPC through a dedicated set of shared subnets (prefixed with "TGW") in each availability zone.
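The spoke side of this wiring could be sketched like this. Again, it is a hypothetical example rather than the repo's code: the input variables stand in for IDs from the DEV VPC, the Transit Gateway keeps its default route table association and propagation settings, and all resources are created in the network account, which owns both the Transit Gateway and the shared VPC.

```hcl
# Hypothetical inputs: IDs from the DEV spoke VPC defined elsewhere.
variable "dev_vpc_id" { type = string }
variable "dev_tgw_subnet_ids" { type = list(string) } # the "TGW" subnets, one per AZ
variable "dev_app_route_table_id" { type = string }

resource "aws_ec2_transit_gateway" "hub" {
  description = "central network hub"
}

# Attach the DEV VPC to the hub through its dedicated TGW subnets.
resource "aws_ec2_transit_gateway_vpc_attachment" "dev" {
  transit_gateway_id = aws_ec2_transit_gateway.hub.id
  vpc_id             = var.dev_vpc_id
  subnet_ids         = var.dev_tgw_subnet_ids
}

# App subnets send everything that is not local to the VPC towards the hub.
resource "aws_route" "dev_app_default" {
  route_table_id         = var.dev_app_route_table_id
  destination_cidr_block = "0.0.0.0/0"
  transit_gateway_id     = aws_ec2_transit_gateway.hub.id
  depends_on             = [aws_ec2_transit_gateway_vpc_attachment.dev]
}
```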

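This is the journey internet traffic takes in this centralised hub-and-spoke architecture, shown here for traffic returning to a workload (NA means the resource is in the network account, while WA means the resource is shared into, and used from, the PRD/UAT/DEV workload account). Outbound traffic follows the same hops in reverse; because the path runs through a NAT gateway, only responses to connections initiated from the workload can come back in.

- Response from the internet ➡️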

- Internet gateway (NA) ➡️

- Public egress subnet (NA) ➡️

- NAT gateway (NA) ➡️

- Private egress subnet (NA) ➡️

- Transit gateway (NA) ➡️

- PRD VPC (WA) ➡️

- TGW Subnet (WA) ➡️

- App Subnet (WA) ➡️

- AWS resources utilising the app subnets.
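The hub side of that path, in the network account's egress VPC, might be sketched as follows. This is a minimal, hypothetical example: every variable stands in for a resource defined elsewhere, `tgw_default_route_table_id` would typically come from the Transit Gateway's `association_default_route_table_id` attribute, and `10.0.0.0/8` is an assumed supernet covering all spoke VPC CIDRs. The key detail is the return route: the NAT gateway uses the route table of its own (public) subnet, so that table needs a route back to the spokes via the Transit Gateway.

```hcl
# Hypothetical inputs, all owned by the network account.
variable "tgw_id" { type = string }
variable "tgw_default_route_table_id" { type = string }
variable "egress_vpc_id" { type = string }
variable "egress_private_subnet_ids" { type = list(string) } # TGW attachment subnets
variable "egress_private_route_table_id" { type = string }
variable "egress_public_route_table_id" { type = string }
variable "nat_gateway_id" { type = string }
variable "spoke_supernet" {
  type    = string
  default = "10.0.0.0/8" # assumed to cover every spoke VPC CIDR
}

# Attach the egress VPC to the Transit Gateway via its private subnets.
resource "aws_ec2_transit_gateway_vpc_attachment" "egress" {
  transit_gateway_id = var.tgw_id
  vpc_id             = var.egress_vpc_id
  subnet_ids         = var.egress_private_subnet_ids
}

# Transit Gateway: anything bound for the internet goes to the egress VPC.
resource "aws_ec2_transit_gateway_route" "default_to_egress" {
  destination_cidr_block         = "0.0.0.0/0"
  transit_gateway_attachment_id  = aws_ec2_transit_gateway_vpc_attachment.egress.id
  transit_gateway_route_table_id = var.tgw_default_route_table_id
}

# Private egress subnets: traffic arriving from the TGW heads to the NAT gateway.
resource "aws_route" "private_to_nat" {
  route_table_id         = var.egress_private_route_table_id
  destination_cidr_block = "0.0.0.0/0"
  nat_gateway_id         = var.nat_gateway_id
}

# Public egress subnet: once the NAT gateway translates responses back to
# private spoke addresses, they are sent to the TGW, not the internet gateway.
resource "aws_route" "public_to_spokes" {
  route_table_id         = var.egress_public_route_table_id
  destination_cidr_block = var.spoke_supernet
  transit_gateway_id     = var.tgw_id
  depends_on             = [aws_ec2_transit_gateway_vpc_attachment.egress]
}
```

With a single default Transit Gateway route table, DEV, UAT and PRD can also reach one another; isolating the environments would need separate Transit Gateway route tables per environment.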

In conclusion, using RAM and Transit Gateway to build a centralised network architecture for our AWS accounts and organisations offers a powerful way to avoid the pitfalls of a fragmented AWS network posture.

By adopting a centralised "Hub and Spoke" network topology, organisations can achieve enhanced security, reduced operational complexity, and better cost-effectiveness as they scale their AWS infrastructure.

Give it a try - centralised RAM network example repo

Thanks for reading,

Mehmet