Docker Deep Dive by Nigel Poulton Review - Chapter 1: The History of Docker

Nigel Poulton is a technical author who specialises in emerging technologies, including Docker, Kubernetes and, most recently, Wasm. Having read Poulton's work on various technologies before, I have found his writing style to be simultaneously engaging, educational and, at times, absolutely hilarious.

With the new 2025 edition of Docker Deep Dive released only a few months ago, I thought it would be a good idea to work through all 16 chapters of the book as an excuse to do my own "deep dive" into the Docker ecosystem.

Even though I use Docker frequently at work (mainly alongside cloud-managed services and CI/CD pipeline architecture), I have found in the past that going back to the basics of a technology we take for granted can only be a good thing.

Just as the best boxers incessantly practise their jab on the heavy bag, or a Muay Thai fighter drills the teep kick thousands of times each week, we as technology professionals shouldn't shy away from sharpening our fundamentals in the core technologies we work with every day.

This matters all the more given how many higher-level abstractions are now built on top of these systems (I'm looking at you, OpenAI, and your $150 million per day running costs 💰🦿).

Everyone has a plan until Docker's "Killed by OOM" error message punches you in the face


To make this fit a blog rather than a containerised rendition of Tolstoy's War and Peace, I've decided to dedicate one post to each of the book's 16 chapters. I'd highly recommend reading the book for yourself, but if you want a more concise overview of each chapter's subject (Docker architecture, Compose, Swarm, security, etc.), then read on and we'll dive in 🥽.

Containers From 30,000 Feet

The journey to containers becoming (one of) the main modes of compute in 2025 has been long, arduous and littered with inadequacies. In the decades before containers, or even VMs, businesses lacked the experience to model their performance requirements, so they had no choice but to estimate how many bare-metal servers their applications needed using guesswork alone.

As Poulton notes, because nobody wanted to deal with underpowered servers that couldn't handle their business applications, racks upon racks of expensive, overpowered on-prem mainframes and workstations were bought just to make sure there were enough compute resources available.

However, this practice was extremely wasteful, especially given that it was not uncommon for these servers to use only 5-10% of their total capacity at run-time.

The inability to scale quickly, economically and easily meant that if an application needed another 2-5% of CPU, an entirely new server (think IBM RS/6000s or HP Superdomes) had to be bought just to meet that minuscule increase in compute requirements.

Hello VMware

Towards the end of the 1990s, something big happened: VMware, Inc. popularised what we now commonly call the virtual machine (VM). This technology allowed multiple business applications to run simultaneously on a single bare-metal server, each inside its own virtual machine.

Up until Broadcom's acquisition in 2023, VMware still had a thriving community of users. Unfortunately, licensing cost increases of 300-1000% and the rapid, greedy monetisation of the business have caused an exodus of even its most dedicated fans.

As Poulton puts it, this was an enormous game changer. Applications could now run on the spare capacity of existing servers and be set up much faster, as the arduous process of provisioning an entirely new bare-metal server was removed from the delivery pipeline.

Most importantly, VMware sparked a revolution, turning servers from rigid, singular physical entities into something dynamic that could be abstracted into smaller, software-defined units of compute. Undoubtedly, this laid the groundwork for the cloud-native architectures we use today.

Hello Containers!

However, even though VMware was a huge leap forward in server technology, it still came with what Poulton calls VMwarts. Every virtual machine still needed its own dedicated OS, and each OS consumes CPU, RAM and other resources that would be better spent actually running applications.

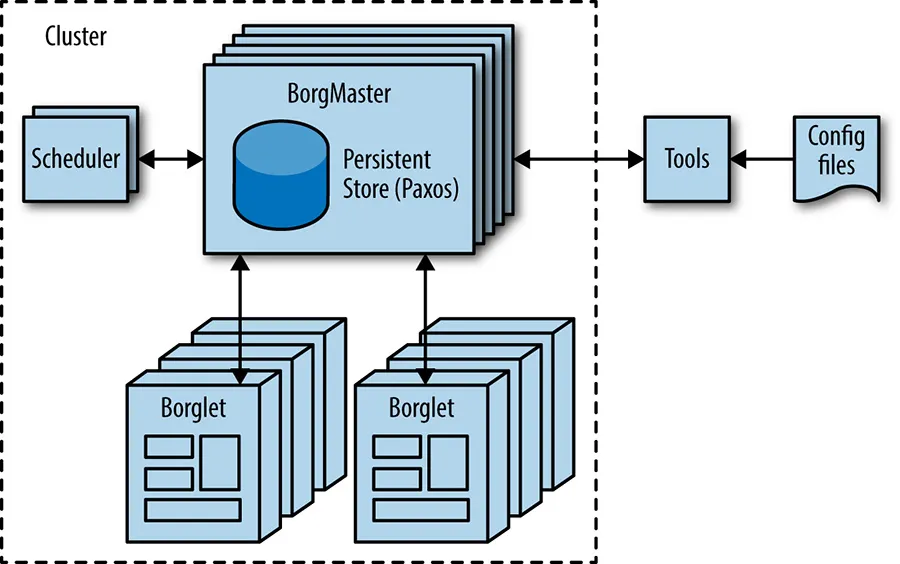

While the vast majority of companies were still using VMs for almost all of their computational needs, Google had already made the leap to containers. Its internal cluster manager, Borg, ran workloads inside containers isolated using Linux kernel features such as cgroups (control groups).

Despite being developed as far back as 2003, Borg is reportedly still widely used to run Google's core services.


A key difference between the container model and VMs is that every container shares the OS kernel of the host it's running on, instead of needing a full OS of its own. Poulton notes that this is what allowed container technologies to make their quantum leap over virtual machines: a host that could run 10 VMs might be able to run 50 containers, making containers inherently more efficient.

Borg's ability to manage clusters of tens of thousands of machines, launching billions of containers every week, made it a core part of Google's internal infrastructure and a trailblazing project for other companies to follow. The era of container-dominated applications had been born and set in motion.

Hello Docker 🐋

If Borg was the IBM Simon (the first smartphone in the world, see pic below), then Docker would be the iPhone. While Borg could be incredibly complex and difficult to understand if you weren't part of Google's internal ops team, Docker provided the magic needed to make Linux containers easy and bring them to the masses.

I know it's pretty hideous, but I bet an iPhone couldn't hold a candle to its battery life.

With its clean CLI command structure, declarative configuration through the Dockerfile and pre-built images, Docker became the reigning champion of containerisation technologies after its launch in 2013 (more on this in my review of Poulton's second chapter).

Eventually, Microsoft saw the writing on the wall, along with the undeniable efficiency, speed and portability gains of containers, and worked very hard to bring container technologies to the Windows platform.

Although Windows 10 and 11 let you use WSL 2 (Windows Subsystem for Linux) together with Docker Desktop, and you can also run Windows containers on your desktop, the vast majority of containers running in the world today are still Linux containers.

Wasm

If we thought we couldn't abstract our servers into anything smaller than a Docker container, we were mistaken. Wasm (WebAssembly) is a binary instruction format that lets apps run in a way that is smaller, faster, more portable and more secure than current technologies allow.

As a portable compilation target for over 40 different languages, Wasm allows developers to leverage existing codebases without having to rewrite them. The result is applications running in secure, isolated environments as tiny, kilobyte-sized binaries that can start up in microseconds.

However, Poulton argues that Wasm is still an early technology with a lot of work to be done before widespread adoption can be expected. Its standards are still being developed, and containers remain the dominant model for cloud-native applications thanks to the richness of their surrounding ecosystem. Apparently there will be an entire chapter dedicated to Wasm and its latest Docker-related developments, which I'm excited about.

Chapter Summary

We used to live in a world where every business application needed a dedicated server to run on. In many cases these servers were overpowered and suffered from the inefficiency of large amounts of spare compute power sitting idle. Following the success of VMware and hypervisors, a new, lighter and even more portable form of virtualisation came about: containers.

After Google's successful, yet complex, management of its internal workloads using Borg, Docker eventually became the containerisation technology that truly brought containers to the masses.

With new developments around Wasm and AI/LLM-related container images, Docker has a bright future ahead and will remain the foundation of innovation in application and server workloads for many years to come.

Thanks for reading! Stay tuned for Chapter 2 of Docker Deep Dive, where we'll go deeper into Docker and the standards behind container-related projects.

Mehmet