Docker Deep Dive by Nigel Poulton Review - Chapter 2: Docker and container-related standards and projects

Chapter 2 of Docker Deep Dive focuses on two separate parts: Docker, Inc and the Docker platform.

Docker, Inc

Founded by French-American engineer Solomon Hykes, Docker, Inc. started in Palo Alto as a platform as a service (PaaS) provider called dotCloud. dotCloud delivered its services to clients on top of a variety of container-related in-house tooling.

The very early days of dotCloud, when the predecessor to Docker was unknown and all its devs worked in a jungle

One of these tools, a Python-based CLI, acted as the frontend to LXC (a low-level Linux container virtualisation technology), managed container images and could automatically generate its own LXC configuration file and invoke lxc-start. This tool was called dc, or the dotCloud container engine, and would be the ancestor of Docker as we know it today.

By 2013, dotCloud would shed the struggling PaaS side of its business and rebrand itself as Docker, Inc., named after an internal side project that it had been working on for several months. The course of computing history had been changed forever, as Docker, Inc. would focus on bringing Docker and containers to the world.

The Docker Technology

After years of toil and failure, Docker would eventually blaze the trail for container adoption across the tech industry and become the widely loved and respected tool it is today. At a high level, there are two fundamental parts of the Docker platform that have made it so easy to build, share and run containers:

- The CLI (client) 📟

- The Engine (server)🔧

The CLI is the part of Docker that you're probably most familiar with; a command-line tool that can convert simple commands into API requests before sending them to the Docker engine to deploy or manage your containers:

docker build -t jurassic-park .

docker run -d -p 8080:80 jurassic-park

The Docker CLI follows standard UNIX argument conventions and accepts both short and long forms of its flags. We can rewrite the commands above with long-form flags instead, to tag the jurassic-park image and publish its ports to the host when we run it:

docker build --tag jurassic-park .

docker run --detach --publish 8080:80 jurassic-park

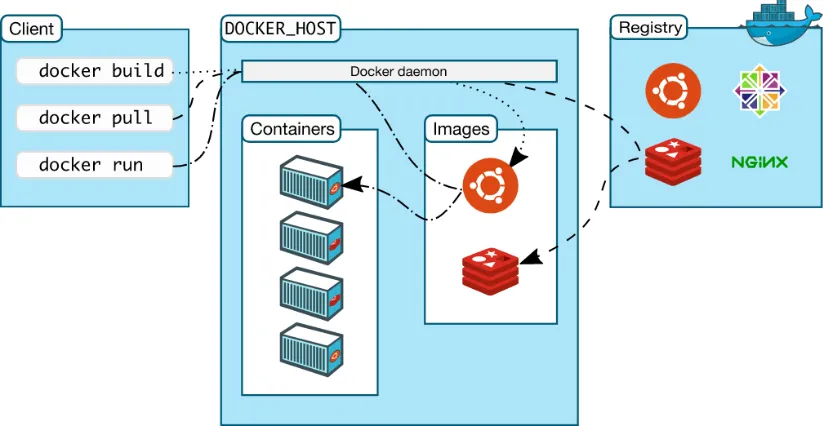

On the other side, the Docker engine (also called the Docker daemon) is the brain behind the CLI: it receives the API requests that the CLI generates from your commands and carries them out.
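To make the CLI and engine split a little more concrete, here is a minimal sketch of the kind of request body the CLI could send to the engine's `/containers/create` endpoint for the `docker run` example above. The helper name `create_body` and the `DOCKER_SOCK` variable are my own inventions for illustration, and the socket path is only the usual Linux default, so treat this as an approximation rather than what the CLI does internally. Detaching is handled on the client side, and the real CLI follows up with a separate request to start the container.

```shell
#!/bin/sh
# Sketch: roughly what the Docker CLI does on your behalf.
# create_body is a hypothetical helper for this example, not part of Docker.

# Build a JSON body for POST /containers/create that corresponds to
#   docker run --publish <host_port>:<container_port> <image>
create_body() {
  image="$1"
  host_port="$2"
  container_port="$3"
  printf '{"Image":"%s","ExposedPorts":{"%s/tcp":{}},"HostConfig":{"PortBindings":{"%s/tcp":[{"HostPort":"%s"}]}}}\n' \
    "$image" "$container_port" "$container_port" "$host_port"
}

# Print the request body for the jurassic-park example
create_body jurassic-park 8080 80

# Assumed default socket path on Linux; it differs on other setups.
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

# Read-only call: ask the engine for its version, but only if a daemon
# socket and curl are actually available on this machine.
if [ -S "$DOCKER_SOCK" ] && command -v curl >/dev/null 2>&1; then
  curl --silent --unix-socket "$DOCKER_SOCK" http://localhost/version \
    || echo "engine not reachable"
  echo
fi
```

If a daemon is running, the second half prints the same kind of version information you get from `docker version`, which shows that the CLI is just one possible client of the engine's API.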

As Poulton notes, the relationship between the CLI and the engine is quite complex, but it is hidden behind the client, which is what makes Docker so simple to use. Later on in the book, Poulton dives deeper (as the title of the book goes) into these topics, where we will see just how many parts the Docker ecosystem is made up of.

The Docker Architecture - simplified to its very core functions

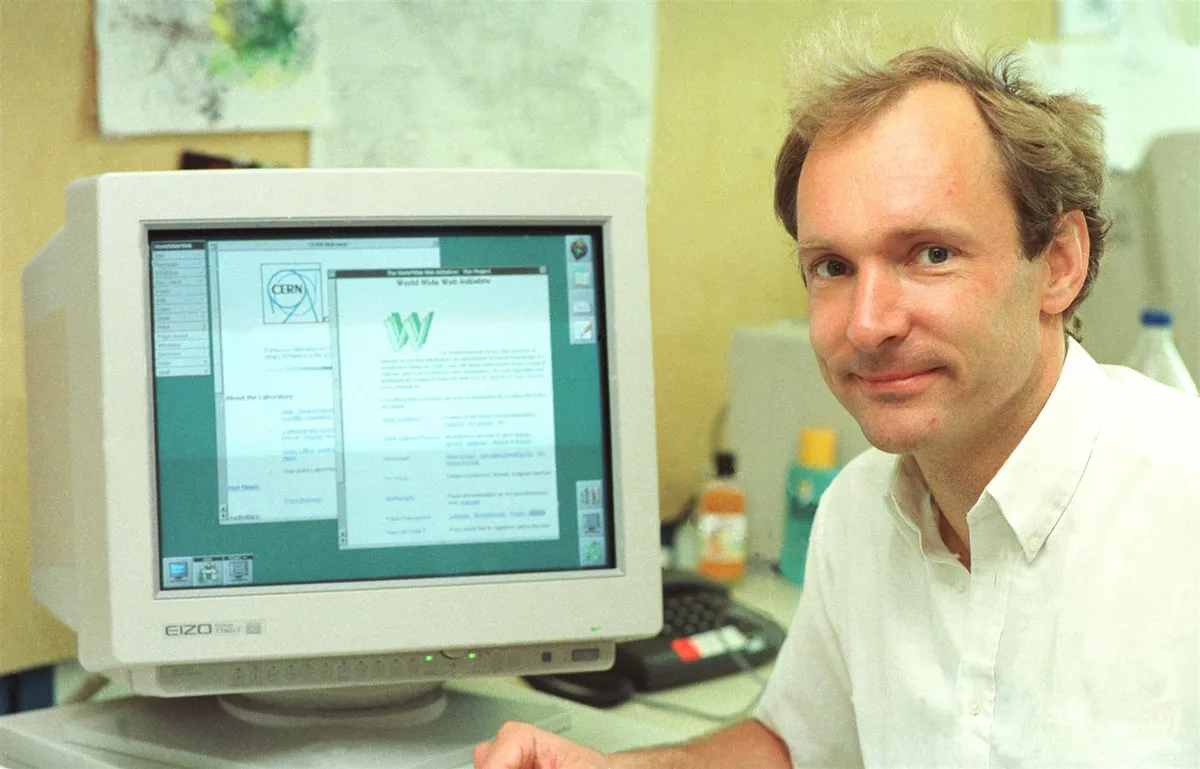

Open Container Initiative - OCI

Standards are extremely important in technology. If we take the World Wide Web as an example, despite evolving massively over the past 35 years, it has done so with the built-in core standards of HTTP, HTML and TCP/IP as a non-negotiable element of its use and development.

With the standards being backwards compatible, and the Internet Engineering Task Force (IETF) ensuring that no single company controls these standards or creates any form of vendor lock-in, competition has been able to flourish in the WWW space, with a level playing field on which tech giants and start-ups alike can compete fairly.

In similar fashion, the Open Container Initiative was founded in 2015 under the umbrella of the Linux Foundation. Its origins lie with a company called CoreOS, whose people had begun to dislike the way Docker was dominating the container ecosystem.

CoreOS created an open standard called appc that defined specifications for integral parts of containers like the runtime engine and image format. This put Docker in the awkward position of having to deal with two separate, competing standards.

Tim Berners-Lee could have become the world's first trillionaire if he had patented HTML, HTTP and URLs. Instead he had the extremely admirable commitment to keeping the web free and open from its inception. What a boss.

If we imagine adopters of TCP/IP competing with a completely different set of internet standards, and the chaos that would ensue, it is easy to see how this divergence of container standards could have seriously slowed down adoption or even spelt the end of the technology as a whole.

Fortunately, the main players of the ecosystem came together and collaborated to form the OCI as a vendor-neutral council that would govern low-level container standards moving forward. As of today, the OCI maintains the image, runtime and distribution specifications.

Poulton compares these OCI standards to railway tracks: after the size and properties of railway tracks were standardised in the 1800s, entrepreneurs and businesses in the rail industry had confidence that the new products they built would work on these standardised railways.

In similar fashion, since the OCI has taken control of container specifications and standards, the container ecosystem has flourished across the entire tech industry as it is recognised as a secure, stable, open and free technology that can be used by all companies (and individuals).

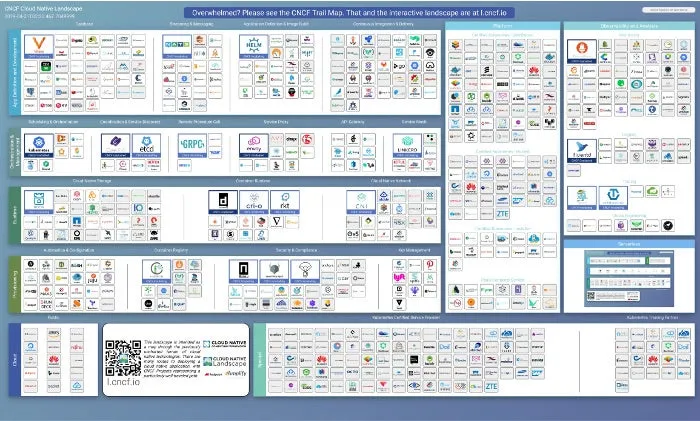

Cloud Native Computing Foundation - CNCF

The CNCF is another Linux Foundation project that has been crucial to the development and production of new container technologies. It was founded in 2015, the same year as the OCI, with the goal of advancing container technologies and making cloud native computing ubiquitous.

While the OCI is in charge of creating and maintaining container-related specifications, the CNCF is the main assembly body for a variety of cognate projects, such as Kubernetes, containerd, Notary, Prometheus and many others.

By hosting these projects, the CNCF provides a space, structure and collective support for the projects to grow and mature. To get there, a project has to pass through three separate phases:

- Sandbox ⏳

- Incubating 🪺

- Graduated 🎉

A project passes through each of these levels of maturity by growing in the quality of governance, marketing, documentation and community engagement that it receives. A new project accepted as a sandbox project by the CNCF might be underpinned by great technology and future ideas, but may need help with governance to reach the level of graduation. The full breadth of current CNCF projects under development is listed on the CNCF website.

If you have an entire weekend to yourself you might just have enough time to go through all of the CNCF projects

The Moby Project

Finally, the Moby project was created by Docker as a community-led space for developers to work on specialised tools for building container platforms. From Docker's perspective, this project can be used as an "open R&D lab" to experiment with and develop new components for future container technologies. Originally created in 2017, the project now counts members from Microsoft, Nvidia and Mirantis as part of its cohort.

Chapter Summary

Docker, Inc. was the first mover and instigator of the modern container revolution. However, under the deep influence of open-source culture, the OCI, CNCF and Moby Project eventually emerged from Docker's initial dominance, which has led to a much more community-driven development of container technologies.

Thanks for reading, and stay tuned for chapter 3 of Docker Deep Dive, where we'll be going deeper into how exactly we can install and use Docker on a variety of operating systems.

Mehmet